VM-Vault

In the past few months, I've been working on a few projects related to virtualization, and it makes sense to publicly share one of them. This project, VM-Vault, consists of a small hypervisor and a small virtual machine which together protect cryptographic keys against memory disclosure vulnerabilities. An attacker who is able to read any data in the Linux kernel virtual memory would not be able to reveal the cryptographic keys, while the keys can still be used for encryption and decryption.

My Master's thesis

With my recent adventures in SVM-based virtualization, it made sense to revisit my Master's thesis on protecting kernel cryptographic keys inside an encrypted virtual machine (text available here). In my thesis, the virtual machine's memory is encrypted via AMD Secure Encrypted Virtualization (SEV), which makes the cryptographic keys inaccessible to the outside world. Keys and encryption requests were communicated via the VirtIO (and vhost) interface. With this solution, users of the Linux Kernel Crypto API, like dm-crypt, could transparently have their keys protected against the exploitation of memory disclosure vulnerabilities.

While the design achieved the goal of protecting key material against memory disclosure vulnerabilities, it suffered from resource under-utilization and high memory usage. This renders it impractical because likely nobody would use a solution which degrades disk encryption performance by 50% and uses over one gigabyte of memory.

The huge memory overhead and performance degradation in my thesis solution are both directly linked to my choice of using abstractions and to my idea of re-purposing an existing implementation to achieve something else. Namely, I relied on the existing virtualization stack (QEMU, KVM, etc.) and used a general-purpose kernel inside the VM. Both choices simplify the implementation by relying on the provided abstractions, but they don't make sense in the end because they provide too much functionality and solve too many problems. The memory overhead accumulates from the usage of QEMU, OVMF, the Linux kernel and a minimal user space. The performance degradation comes from the necessity to copy data between unencrypted and encrypted memory when AMD SEV is used.

We don't need any of these abstractions and existing infrastructure to solve the problem of hiding some memory while still being able to operate on it. This goal has already been achieved in various ways (Tresor, Amnesia, Copker, Mimosa, etc.) with varying success. However, I would still like to rely on virtualization for my solution because virtualization provides a natural isolation mechanism.

Presenting VM-Vault

VM-Vault protects host cryptographic keys against memory disclosure vulnerabilities by storing the keys in its internal memory, which is never directly readable or writeable by the Host kernel. The VM-Vault Hypervisor ensures that the VM's physical memory is never mapped into any address space other than that of the VM. This measure prevents the host kernel from ever directly reading the stored cryptographic keys or the generated round keys from memory. To ensure that round keys are not leaked while they are in use, interrupts are disabled.

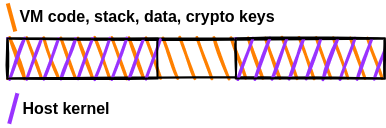

The figure above depicts the idea. VM-Vault can access the memory marked in orange, which is all of physical memory. However, the Host can directly access only the memory marked in purple, which is all of memory except the memory used by VM-Vault. Thus, the host cannot directly access the stored keys via memory accesses without modifying the page tables.

The performance of handling encryption requests is important for VM-Vault to be of any practical use. To ensure that encryption and decryption throughput is high, VM-Vault does not perform any additional memory copies between different address spaces. Instead, VM-Vault performs address translation for the host memory ranges and works directly with the physical addresses of data.

The figure above shows address translation for an encryption request. The host kernel operates with data which spans a virtual address range but the data may be mapped onto multiple physical ranges. This creates a problem because VM-Vault is designed to work with host physical addresses, and thus this virtual range needs to be split into its corresponding contiguous physical ranges. In this case, the virtual range is split into two physical ranges. VM-Vault can directly work with physical addresses because all of physical memory is linearly mapped into the VM's address space.
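The splitting step can be illustrated with a small user-space sketch. It is not taken from the VM-Vault sources: the `phys_range` structure, the `split_virtual_range` function, the translation callback and the toy mapping are all hypothetical names chosen for illustration. The sketch walks a virtual range page by page and merges pages which happen to be adjacent in physical memory.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define TOY_PAGE_SIZE 4096u  /* assumed page size */

/* One physically contiguous piece of a request (hypothetical layout). */
struct phys_range {
    uint64_t start;  /* physical address of the first byte */
    uint64_t len;    /* length in bytes */
};

/* Hypothetical callback: returns the physical address backing vaddr. */
typedef uint64_t (*translate_fn)(uint64_t vaddr);

/* Splits [vaddr, vaddr + len) into physically contiguous ranges.
 * Returns the number of ranges written to out, or 0 if len is 0
 * or out has fewer than the required number of entries. */
size_t split_virtual_range(uint64_t vaddr, uint64_t len, translate_fn translate,
                           struct phys_range *out, size_t max_out)
{
    size_t n = 0;

    while (len > 0) {
        /* Never cross a page boundary within one step. */
        uint64_t in_page = TOY_PAGE_SIZE - (vaddr % TOY_PAGE_SIZE);
        uint64_t chunk = len < in_page ? len : in_page;
        uint64_t paddr = translate(vaddr);

        if (n > 0 && out[n - 1].start + out[n - 1].len == paddr) {
            /* Physically adjacent to the previous range: extend it. */
            out[n - 1].len += chunk;
        } else {
            if (n == max_out)
                return 0;
            out[n].start = paddr;
            out[n].len = chunk;
            n++;
        }
        vaddr += chunk;
        len -= chunk;
    }
    return n;
}

/* Toy mapping for illustration only: virtual pages 0, 1, 2 are backed
 * by physical pages 5, 6, 9, so pages 0 and 1 are physically adjacent. */
uint64_t toy_translate(uint64_t vaddr)
{
    static const uint64_t frames[] = { 5, 6, 9 };
    uint64_t page = vaddr / TOY_PAGE_SIZE;

    assert(page < sizeof(frames) / sizeof(frames[0]));
    return frames[page] * TOY_PAGE_SIZE + vaddr % TOY_PAGE_SIZE;
}
```

With the toy mapping, a request covering all three virtual pages yields two physical ranges, which mirrors the two-range split described above.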

It may seem that the split makes the implementation inconvenient and slow, but Linux cipher modules already receive a scatterlist for encryption/decryption requests. Thus, all of the work of splitting the virtual range into physically contiguous sub-ranges is already done anyway. Address translation may also come almost for free if the buffer's virtual address comes from the Linux direct-map. In this case, translation of a Host virtual address into a VM-accessible virtual address would require one integer subtraction and one integer addition.
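A minimal sketch of the direct-map case follows. Both base addresses are assumptions made for illustration: `HOST_DIRECT_MAP_BASE` is the usual x86-64 Linux direct-map base when KASLR is disabled, and `VM_LINEAR_MAP_BASE` is a made-up address for where the VM maps physical memory. The function name is hypothetical as well.

```c
#include <assert.h>
#include <stdint.h>

/* Assumed values, for illustration only. */
#define HOST_DIRECT_MAP_BASE 0xffff888000000000ULL /* host direct map start */
#define VM_LINEAR_MAP_BASE   0x0000100000000000ULL /* hypothetical VM mapping */

/* Translates a host direct-map virtual address into the address at
 * which the VM would see the same physical memory. */
static inline uint64_t host_virt_to_vm_virt(uint64_t host_vaddr)
{
    assert(host_vaddr >= HOST_DIRECT_MAP_BASE);

    uint64_t phys = host_vaddr - HOST_DIRECT_MAP_BASE; /* one subtraction */
    return phys + VM_LINEAR_MAP_BASE;                  /* one addition */
}
```

No page-table walk is needed here, which is why this path is close to free compared to translating an arbitrary virtual address.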

Life of an encryption request

Let's examine the steps taken to serve an encryption request, so that some more light is shed onto VM-Vault and some performance issues become more apparent.

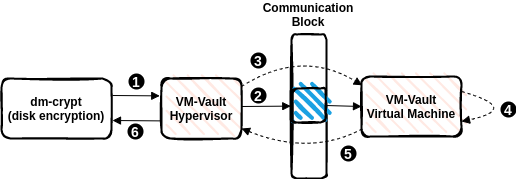

The figure above shows the steps which are taken to complete an encryption request made from a Linux kernel module. In this example, the request is sent from the dm-crypt kernel module, but a user space process can similarly issue such requests via the ioctl interface. In step 1, dm-crypt sends an encryption request via the Kernel Crypto API to the current cipher implementation, which happens to be the one provided by VM-Vault. The VM-Vault Hypervisor takes the request in step 2, parses the data segments and populates the necessary information into the communication block. There are many communication blocks, one for each logical CPU core, so that requests from each core can be served in parallel. In step 3, the hypervisor resumes the VM-Vault Virtual Machine, which is the only entity having access to the cryptographic key for the corresponding request. The VM takes the request in step 4 and encrypts the data using its own AES implementation based on the AES-NI extension. After the encryption is performed, the VM performs a VM-exit (using the vmgexit instruction) in step 5 to pass execution to the VM-Vault Hypervisor. Finally, in step 6 the hypervisor returns success to the caller.
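To make steps 2 to 5 more concrete, here is a user-space sketch of what a per-core communication block could look like. The structure layout, the field names, the limits and both functions are hypothetical and are not copied from the VM-Vault sources. The "VM side" only sums up the segment lengths where the real VM would encrypt the data.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define MAX_CPUS     4  /* assumed number of logical cores */
#define MAX_SEGMENTS 8  /* assumed per-request segment limit */

enum req_op     { REQ_ENCRYPT = 1, REQ_DECRYPT = 2 };
enum req_status { REQ_EMPTY = 0, REQ_PENDING = 1, REQ_DONE = 2 };

struct segment {
    uint64_t phys_addr;  /* host physical address of the data */
    uint64_t len;        /* length in bytes */
};

/* Hypothetical communication block: one per logical core. */
struct comm_block {
    uint32_t op;
    uint32_t key_id;     /* refers to a key stored inside the VM */
    uint32_t status;
    uint32_t num_segments;
    struct segment segments[MAX_SEGMENTS];
};

static struct comm_block comm_blocks[MAX_CPUS];

/* Step 2: the hypervisor fills the block of the current core.
 * Returns 0 on success and -1 on invalid arguments. */
int hv_submit_request(unsigned cpu, enum req_op op, uint32_t key_id,
                      const struct segment *segs, size_t n)
{
    if (cpu >= MAX_CPUS || n == 0 || n > MAX_SEGMENTS)
        return -1;

    struct comm_block *b = &comm_blocks[cpu];
    b->op = (uint32_t)op;
    b->key_id = key_id;
    b->num_segments = (uint32_t)n;
    for (size_t i = 0; i < n; i++)
        b->segments[i] = segs[i];
    b->status = REQ_PENDING;
    return 0;
}

/* Stand-in for steps 4 and 5: the VM walks the segments and marks the
 * request as done. Returns the number of bytes it would have processed. */
uint64_t vm_handle_request(unsigned cpu)
{
    assert(cpu < MAX_CPUS);

    struct comm_block *b = &comm_blocks[cpu];
    uint64_t total = 0;

    if (b->status != REQ_PENDING)
        return 0;
    for (uint32_t i = 0; i < b->num_segments; i++)
        total += b->segments[i].len;  /* real code would encrypt here */
    b->status = REQ_DONE;
    return total;
}
```

Because each core owns its block, no locking is needed between cores in this sketch. The key itself never appears in the block, only an identifier for it.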

This design adds extra steps (e.g. a context switch) which definitely contribute to higher latency for an encryption request. This latency is measured in the next section.

Performance

Having a solution which protects kernel cryptographic keys against memory disclosure vulnerabilities is great, but this solution needs to provide performance similar to that of the standard AES cipher implementation in Linux. If performance is not similar, then this solution is rendered impractical for use cases like disk encryption.

When measuring "performance", I considered both throughput (how many megabytes of data can be encrypted per second) and latency (how much time it takes to serve one request on average). High throughput is necessary to ensure that a large quantity of data (large encrypted partition) can be decrypted and read as fast as possible. Low latency is desired to ensure that a small encryption/decryption request (network packet, message) can be done and processed as fast as possible, otherwise the user may observe high latency in dependent applications.

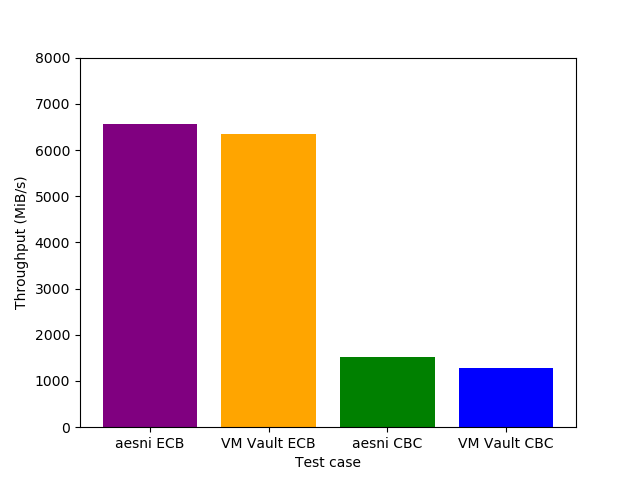

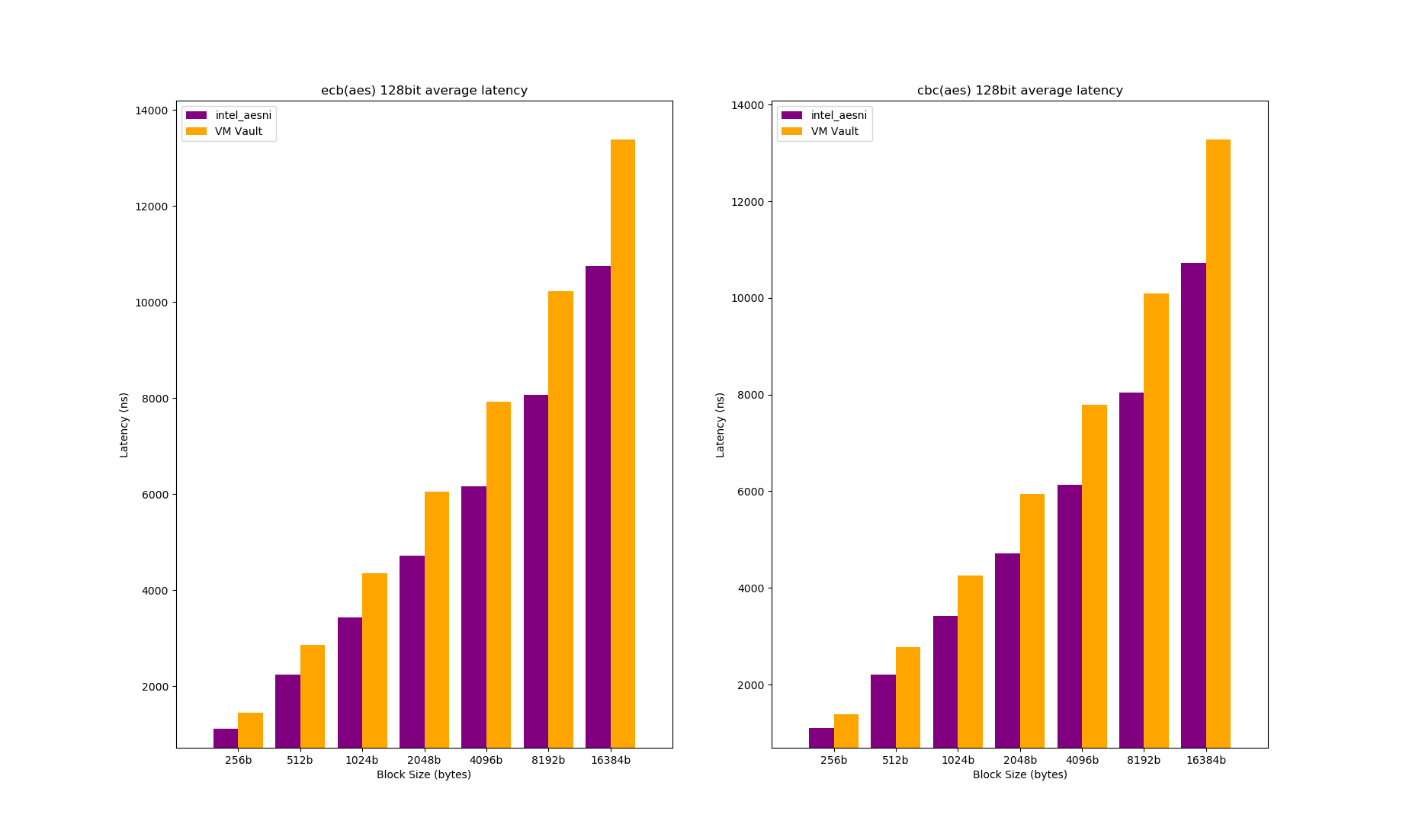

To measure throughput and latency, I micro-benchmarked VM-Vault against the intel_aesni Linux module for ECB(AES) and CBC(AES) with a 128-bit-long key. The micro-benchmark loads a kernel module which uses the Linux Kernel Crypto API directly. Throughput is measured by encrypting 1024 buffers of size 4 MiB each (total 4 GiB). Latency is measured for different request sizes (256 bytes to 16 kilobytes) and is reported as the average latency of 128K encryption requests.
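The two reported metrics boil down to simple arithmetic over measured times. The helpers below are a sketch with hypothetical names. They are not the benchmark module itself and contain no measured values.

```c
#include <assert.h>
#include <stdint.h>

/* Throughput in MiB/s for total_bytes processed in elapsed_ns. */
double throughput_mib_per_s(uint64_t total_bytes, uint64_t elapsed_ns)
{
    assert(elapsed_ns > 0);

    double mib = (double)total_bytes / (1024.0 * 1024.0);
    double seconds = (double)elapsed_ns / 1e9;
    return mib / seconds;
}

/* Average latency in nanoseconds over num_requests requests. */
double average_latency_ns(uint64_t total_elapsed_ns, uint64_t num_requests)
{
    assert(num_requests > 0);

    return (double)total_elapsed_ns / (double)num_requests;
}
```

For the throughput run, `total_bytes` would be 1024 buffers of 4 MiB each, i.e. 4 GiB, divided by the time it took to encrypt all of them.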

As the throughput figure above shows, throughput is only slightly lower than that of intel_aesni, which is very encouraging for the practicality of VM-Vault. Similar throughput means that the solution is applicable to cases where high throughput is of the highest importance, like disk encryption.

The latency figure above shows the average latency for ECB(AES) and CBC(AES). The average latency is noticeably higher when VM-Vault is used, which renders VM-Vault less practical for processing encrypted network packets.

The observed performance degradation for VM-Vault is expected and driven by three main factors. First, switching from the host kernel context to the VM-Vault context incurs a penalty, and this penalty is definitely observed in the results of the latency micro-benchmark. Second, the VM-Vault AES implementation was written by me using the AES-NI extension and may not be as efficient as the one provided by the intel_aesni module. Third, the segments of each request need to be iterated over, address ranges need to be translated and the information needs to be stored into the VM-Vault-accessible communication block.

From the first and third points, I reason that a single encryption request would exhibit a higher latency: the context switch and the extra request processing both add some latency. The second point may be the primary reason why throughput is similar to, but not as high as, that of the intel_aesni module.

Security

The primary goal of VM-Vault is to protect cryptographic keys and the derived round keys. To verify that this goal is achieved, I designed a security test which searches for the round keys in the virtual address space of the kernel. The test only passes if the keys are not found, and fails otherwise. To simplify the search, I made use of nested virtualization which allows one to start a hardware-assisted virtual machine inside another virtual machine. This simplifies the test because the search for the keys can be performed by using the QEMU monitor, or even better gdb-pt-dump.
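The pass/fail logic of such a test can be sketched in a few lines. The actual search was done with the QEMU monitor or gdb-pt-dump, so the code below only illustrates the idea on a plain byte buffer. The function names are hypothetical, and the round keys are assumed to be stored back to back as 16-byte blocks.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define ROUND_KEY_SIZE 16  /* one AES round key is 16 bytes */

/* Returns the offset of the first occurrence of needle in haystack,
 * or -1 if it does not occur. */
long find_bytes(const uint8_t *haystack, size_t hay_len,
                const uint8_t *needle, size_t needle_len)
{
    assert(haystack != NULL && needle != NULL);

    if (needle_len == 0 || needle_len > hay_len)
        return -1;
    for (size_t i = 0; i + needle_len <= hay_len; i++) {
        if (memcmp(haystack + i, needle, needle_len) == 0)
            return (long)i;
    }
    return -1;
}

/* Returns 1 (test passes) only if none of the round keys is found in
 * the memory image, and 0 (test fails) otherwise. round_keys points to
 * num_round_keys consecutive 16-byte round keys. */
int security_test_passes(const uint8_t *mem, size_t mem_len,
                         const uint8_t *round_keys, size_t num_round_keys)
{
    for (size_t r = 0; r < num_round_keys; r++) {
        const uint8_t *key = round_keys + r * ROUND_KEY_SIZE;
        if (find_bytes(mem, mem_len, key, ROUND_KEY_SIZE) >= 0)
            return 0;
    }
    return 1;
}
```

Searching for every round key rather than only the original key matters because any single round key found in memory would already count as a leak.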

The keys were not found in the mapped memory of the Host kernel. This is expected because the memory utilized by VM-Vault is explicitly removed from the Host kernel's direct physical map by using the newly added secret memory area capabilities in Linux.

Issues and Future Work

VM-Vault already achieves its primary goal with reasonable performance, but some improvements can still be made in the areas of usability and extra security.

VM-Vault relies on address space separation and changes to the host page tables to protect cryptographic keys against memory disclosure vulnerabilities. It is possible to protect the cryptographic keys against cold-boot attacks if SEV or SEV-ES support is added to VM-Vault (check Issue 4). The largest hurdle is having access to an SEV/SEV-ES capable CPU because both are reported to be only supported on the AMD EPYC CPUs.

One usability issue of VM-Vault is that it cannot currently co-exist with KVM (another very popular hypervisor). The reason for this is that each of them expects to be the only hypervisor present and maintains the AMD SVM state as if it were its only user. I expect that VM-Vault can work around the issue without any significant performance degradation, and thus allow VM-Vault and KVM to co-exist.

At the time of writing, only ECB and CBC modes are implemented, but there are no technical limitations preventing VM-Vault from supporting more modes. For one, it would be great to add CTR mode support to VM-Vault.

Project Code and Building It

Source code is available at https://github.com/martinradev/vmvault.

If you have issues building the project, please try to check the makefiles and build script. It's probably related to one of the few hardcoded build paths, or potentially to a different kernel version.

If you plan to use VM-Vault inside a virtual machine, you would need to follow the standard process of creating a VM file system image and VM Linux kernel. I may provide a working image for testing purposes at some point.

Final words

Does this make sense? Maybe not, but it was a fun technical exercise.

If you have any questions or improvements, you can create a GitHub issue or contact me on Twitter.

This project has been in the works for some time, and it's related to the Master's thesis I did at TUM. I would like to thank Christian Epple, Felix Wruck and Michael Weiss from Fraunhofer AISEC for giving feedback on my thesis in our occasional meetings.